With the growing need to analyse data at vast scale, Big Data Engineering has emerged as a discipline in its own right alongside Data Science. While Data Science deals with generating insights from data, Data Engineering deals with managing and preparing that data for business analysis.

BIG DATA Solutions

Data Engineering was originally limited to data management on traditional data platforms such as RDBMSs, data warehouses (DW) and data marts. Data Architects designed and managed the data models, data governance, master data management and data security.

ETL Engineers managed data pipelines, and Data Analysts mostly generated reports using basic SQL and reporting tools. Statisticians ran models on the traditional data sets. In the last decade or so, data volumes have grown exponentially in most industries.

Traditional ETL tools, databases and statistical models couldn’t handle these volumes. Along with volume, there is an increasing need for analytics on a variety of data, on real-time data and on data quality. This triggered the need for Big Data platforms and for Data Engineering on Big Data.

At Huge Tech, we draw on our end-to-end Big Data consulting services and our expertise in delivering Advanced Analytics solutions to be the Big Data service provider that helps you meet your business, time-to-market and TCO objectives.

Big Data engineering is a complex and constantly changing ecosystem, and the choice of platform affects the business value derived from your data. Huge Tech Solutions understands that even top enterprises find it challenging to identify the right combination of tools & technologies from the explosion of choices available in the Big Data ecosystem.

Huge Tech Software’s Big Data Services

Equipped with a combination of Big Data Technology expertise, rich delivery experience and a highly capable workforce, Huge Tech Software is able to provide a wide portfolio of Big Data services to its clients.

The services will enable our clients to move forward with their Big Data & business analysis road-map and derive actionable insights, so that they can make quicker, better-informed decisions.

Huge Tech Software’s Big Data & Analytics Services will also help organizations improve efficiencies, reduce TCO and lower risk with commercial solutions.

These services cover not only Big Data but also traditional platforms such as enterprise data warehouses and BI. Huge Tech Software has in its arsenal a well-thought-out reference architecture for Big Data Solutions which is flexible, scalable and robust.

We use standard frameworks when delivering these services, and we provide them across all big data tools & technologies. High-level services include consulting, implementation, ongoing maintenance and managed services.

Advisory/Consulting

- Assessment & Recommendations on Big Data Solutions

- Assessment & Recommendations on DW & BI Solutions

- Provide Strategic roadmap

- Business Intelligence (BI) & Big Data Maturity Assessment

- Proof Of Concept (POC), Pilot & Prototype

- Performance Engineering

- BI Modernization

Ongoing Management & Support

- Big Data Administration & Maintenance

- Cluster Setup

- Cluster Monitoring

- Backup & Recovery

- Version/Patch Management

- Performance Tuning

- Support

- DW & BI Platform Administration & Maintenance

Big Data Implementation

- Architecture & Design

- Build Big Data Solutions

- Data Lake

- DW/Datamart

- Cluster Setup – On Prem, Cloud and Hybrid

- Big Data Applications

- ETL, Data Pipelines & ELT

- Data Management – SQL & NoSQL

- BI & Visualization

- Data Governance

- Data Quality

- Master Data Management

- Metadata Management

- Migrations & Upgrades – Applications, Database

- Cloud – Migration & Onboarding

- Big Data Testing

- Big Data Security

- Delivery Methodologies – Agile, Waterfall, Iterative, DevOps

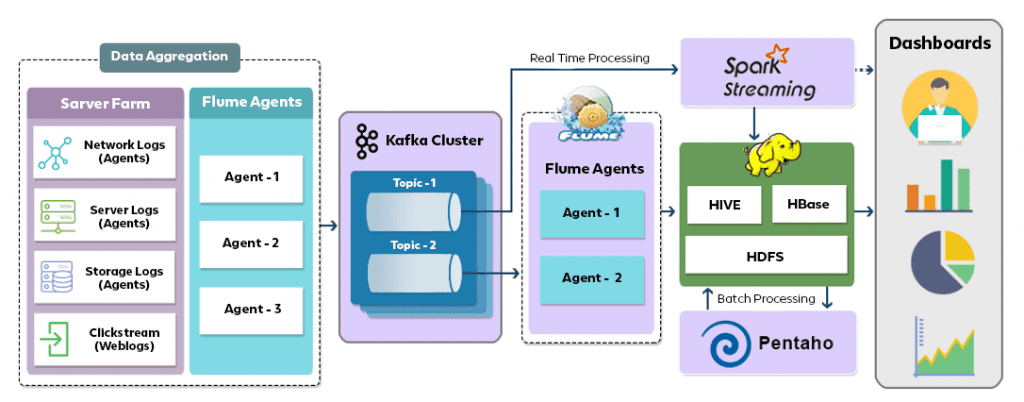

Big Data Stream Processing Architecture

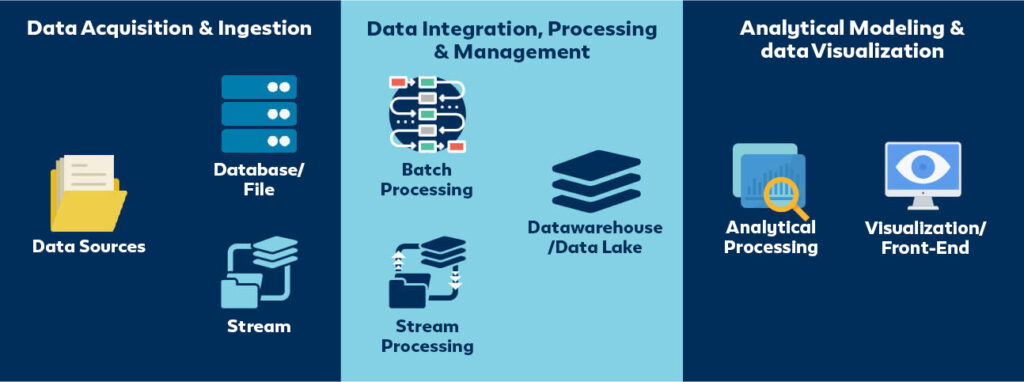

One of the key elements of a big data architecture is handling data in motion, specifically streaming data. Unbounded streams of data need to be captured, processed and analysed. These streaming data sets can be processed and analysed in real time or near real time, depending on the business need.

The above diagram illustrates a big data stream processing architecture with sample technologies. The technology choices may differ depending on factors such as cost, efficiency, open-source availability, developer community, in-house skills and cloud readiness. Stream processing has four steps, from capture to visualization:

Capture – Collection and aggregation of streams (in this case logs using Flume)

Transfer – Real-time data pipeline and movement (Kafka for real-time + Flume for batch)

Process – Real-time data processing (Spark) and batch processing on Hadoop using Pentaho

Visualize – Visualize real-time + batch processed data
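The four steps above can be sketched in a few lines of plain Python. This is only an illustrative, in-memory stand-in and not how Flume, Kafka, Spark or Pentaho are actually wired together: the function names, the log line format (`timestamp level message`) and the sample data are all assumptions made for the example, a `deque` plays the role of the message pipeline, and a simple count per log level stands in for real processing.

```python
from collections import Counter, deque
from typing import Deque, Dict, Iterable, Iterator, List


def capture(raw_lines: Iterable[str]) -> Iterator[Dict[str, str]]:
    """Capture: collect raw log lines and parse them into events."""
    for line in raw_lines:
        parts = line.strip().split(" ", 2)
        if len(parts) != 3:
            continue  # skip blank or malformed lines
        timestamp, level, message = parts
        yield {"ts": timestamp, "level": level, "msg": message}


def transfer(events: Iterable[Dict[str, str]], buffer: Deque[Dict[str, str]]) -> None:
    """Transfer: move events into a buffer (hypothetical stand-in for a message topic)."""
    buffer.extend(events)


def process(buffer: Deque[Dict[str, str]], batch_size: int) -> Iterator[Counter]:
    """Process: drain the buffer in small batches and count events per log level."""
    while buffer:
        batch = [buffer.popleft() for _ in range(min(batch_size, len(buffer)))]
        yield Counter(event["level"] for event in batch)


def visualize(totals: Counter) -> List[str]:
    """Visualize: render the totals as rows of a text bar chart."""
    return [f"{level:<5} {'#' * count} {count}" for level, count in sorted(totals.items())]


def run_pipeline(lines: Iterable[str], batch_size: int = 2) -> Counter:
    """Run capture -> transfer -> process and return the aggregated totals."""
    topic: Deque[Dict[str, str]] = deque()
    transfer(capture(lines), topic)
    totals: Counter = Counter()
    for batch_counts in process(topic, batch_size):
        totals.update(batch_counts)
    return totals


# Made-up sample input, including one malformed line that capture() drops.
SAMPLE_LOGS = [
    "2024-01-01T00:00:00 INFO service started",
    "2024-01-01T00:00:01 ERROR disk full",
    "malformed",
    "2024-01-01T00:00:02 INFO request served",
    "2024-01-01T00:00:03 WARN slow response",
    "2024-01-01T00:00:04 INFO request served",
]

if __name__ == "__main__":
    for row in visualize(run_pipeline(SAMPLE_LOGS)):
        print(row)
```

Keeping each stage as a separate function mirrors the point made about technology choices: any one stage can be swapped for a different tool without changing the others, as long as the data passed between them keeps the same shape.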

Big Data Tools & Technology

|  |  |  |  |